Binary classification shows up all the time in everyday life: dogs versus cats, 100 won coins versus 500 won coins, iPhones versus Samsung Galaxy phones. This time, I’m going to classify movie reviews. Binary classification is probably the most widely applied kind of machine learning problem. Let’s sort reviews into positive or negative based on the review text.

We will proceed in the following order.

- Download dataset

- Data preprocessing

- Model Creation

- Training verification

- Predicting new data with a trained model

Download dataset: IMDB dataset

IMDB stands for Internet Movie Database. The dataset consists of 50,000 strongly polarized reviews, split into 25,000 reviews for training and 25,000 for testing, and each set is 50% positive and 50% negative.

You should never test a machine learning model on the same data you trained it on, because a model that works well on the training data is not guaranteed to work well when new data comes in. What matters is how accurately it predicts on data it has never seen.
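To make this concrete, here is a deliberately silly sketch: a "model" that only memorizes its training examples. The `MemorizingModel` class and the tiny review lists are made up for illustration and have nothing to do with Keras.

```python
class MemorizingModel:
    """Toy 'model' that stores every training example verbatim."""

    def __init__(self, default_label=0):
        self.table = {}
        self.default_label = default_label

    def fit(self, samples, labels):
        for sample, label in zip(samples, labels):
            self.table[sample] = label

    def predict(self, sample):
        # Anything not seen during training gets a fixed guess.
        return self.table.get(sample, self.default_label)


def accuracy(model, samples, labels):
    correct = sum(model.predict(s) == y for s, y in zip(samples, labels))
    return correct / len(samples)


# Made-up data, for illustration only.
toy_train_samples = ["great film", "awful plot", "loved it", "boring"]
toy_train_labels = [1, 0, 1, 0]
toy_test_samples = ["wonderful acting", "terrible pacing", "a delight", "dull"]
toy_test_labels = [1, 0, 1, 0]

toy_model = MemorizingModel()
toy_model.fit(toy_train_samples, toy_train_labels)

train_acc = accuracy(toy_model, toy_train_samples, toy_train_labels)
test_acc = accuracy(toy_model, toy_test_samples, toy_test_labels)
print(train_acc, test_acc)  # 1.0 0.5
```

The training accuracy is perfect, yet on unseen reviews the model does no better than always guessing "negative". Measuring on the training data alone would hide that completely.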

The IMDB dataset is included in Keras. Let’s import it.

import keras
from keras.datasets import imdb

(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)

The argument num_words=10000 means that we keep only the 10,000 words that appear most often in the training data and ignore rare words. This keeps the vector data at a manageable size.
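Roughly, capping the vocabulary amounts to the sketch below. The helper `cap_vocabulary` is hypothetical, and the choice of index 2 as the "unknown word" marker is an assumption based on my understanding of the `imdb.load_data` defaults, so check the Keras documentation before relying on it.

```python
def cap_vocabulary(sequences, num_words, oov_index=2):
    """Replace every word index >= num_words with oov_index.

    oov_index=2 is an assumption about how the loader marks rare words.
    """
    return [
        [index if index < num_words else oov_index for index in sequence]
        for sequence in sequences
    ]


# Made-up encoded reviews; 16530 and 10000 are "rare" words here.
toy_reviews = [[1, 14, 22, 16530], [1, 9999, 10000, 43]]
capped = cap_vocabulary(toy_reviews, num_words=10000)
print(capped)  # [[1, 14, 22, 2], [1, 9999, 2, 43]]
```

After capping, no index is 10,000 or larger, which is what lets each review fit into a 10,000-dimensional vector later on.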

The variables train_data and test_data are lists of reviews. Each review is a list of word indices (an encoded sequence of words). train_labels and test_labels are lists of 0s and 1s, where 0 means negative and 1 means positive.
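To get a feel for the encoding, a review can be mapped back to words. The sketch below uses a made-up four-word dictionary in place of the real one (which, as far as I know, comes from `imdb.get_word_index()`), and it assumes the indices are shifted by 3 because 0, 1, and 2 are reserved for padding, start of sequence, and unknown words.

```python
# Hypothetical miniature word index: word -> frequency rank.
toy_word_index = {"the": 1, "movie": 2, "was": 3, "brilliant": 4}

# Invert it so that we can look up words by rank.
reverse_word_index = {rank: word for word, rank in toy_word_index.items()}


def decode_review(encoded_review, index_offset=3):
    """Turn a list of word indices back into text.

    index_offset=3 is an assumption: indices 0, 1 and 2 are treated as
    reserved markers and rendered as '?'.
    """
    return " ".join(
        reverse_word_index.get(index - index_offset, "?")
        for index in encoded_review
    )


toy_encoded = [1, 4, 5, 6, 7, 2]  # start marker, four words, unknown marker
decoded = decode_review(toy_encoded)
print(decoded)  # ? the movie was brilliant ?
```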

Data preprocessing

You cannot feed lists of integers directly into a neural network. There are two ways to turn the lists into tensors:

- Pad the lists so that they all have the same length and convert them to an integer tensor of shape (samples, sequence_length). The first layer of the neural network must then be a layer that can handle such integer tensors.

- Encode each list as a vector of 0s and 1s. For example, convert the sequence [3, 5] to a 10,000-dimensional vector that is 1 at indices 3 and 5 and 0 everywhere else. A Dense layer, which handles floating-point vector data, can then be used as the first layer of the neural network.

The second method is used here.

import numpy as np

def vectorize_sequences(sequences, dimension=10000):
    # One row per review, one column per word index, all zeros to start
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        # Set the columns of the words that occur in review i to 1
        results[i, sequence] = 1.
    return results

x_train = vectorize_sequences(train_data)
x_test = vectorize_sequences(test_data)
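To see the two encodings side by side on tiny inputs, here is a plain-Python sketch. Both helpers are hypothetical: `multi_hot` mirrors what vectorize_sequences does, and `pad_sequences_sketch` pads on the left, which I assume is similar to the default behaviour of the Keras padding utility.

```python
def pad_sequences_sketch(sequences, maxlen, value=0):
    """Left-pad (or left-truncate) each sequence to exactly maxlen items."""
    padded = []
    for sequence in sequences:
        # Keep at most the last maxlen items.
        trimmed = list(sequence)[max(len(sequence) - maxlen, 0):]
        padded.append([value] * (maxlen - len(trimmed)) + trimmed)
    return padded


def multi_hot(sequences, dimension):
    """Plain-Python counterpart of vectorize_sequences."""
    results = [[0.0] * dimension for _ in sequences]
    for i, sequence in enumerate(sequences):
        for index in sequence:
            results[i][index] = 1.0
    return results


toy_sequences = [[3, 5], [1, 3, 3, 7]]

padded = pad_sequences_sketch(toy_sequences, maxlen=4)
print(padded)      # [[0, 0, 3, 5], [1, 3, 3, 7]]

encoded = multi_hot(toy_sequences, dimension=8)
print(encoded[0])  # [0.0, 0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0]
```

Note what the second encoding throws away: word order is gone, and the repeated 3 in the second sequence is recorded only once.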

Convert the labels to float32 arrays as well.

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

Model Creation

The input data is a vector and the label is a scalar (1 or 0).

The argument (16) passed to each Dense layer is the number of hidden units. Each hidden unit is one dimension of the representation space of the layer.
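One way to picture hidden units: before the activation is applied, a Dense layer computes a weighted sum of its inputs plus a bias for every unit, so the number of units is the length of the output vector. The sketch below uses a hypothetical `dense_affine` helper and made-up weights with 3 inputs and 2 units instead of 10,000 and 16.

```python
def dense_affine(inputs, weights, biases):
    """Weighted sum plus bias for each unit (no activation applied).

    weights[j] holds the input weights of unit j.
    """
    return [
        sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        for unit_weights, bias in zip(weights, biases)
    ]


# Made-up numbers, for illustration only.
toy_input = [1.0, 0.0, 1.0]
toy_weights = [
    [0.5, -1.0, 0.25],  # unit 0
    [-2.0, 0.3, 0.5],   # unit 1
]
toy_biases = [0.1, 0.2]

hidden = dense_affine(toy_input, toy_weights, toy_biases)
print(len(hidden))  # 2: one output value per hidden unit
print(hidden)       # approximately [0.85, -1.3]
```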

Stacking Dense layers requires two important structural decisions: how many layers to use, and how many hidden units each layer gets. Here we use:

- Two hidden layers with 16 hidden units each

- A third layer that outputs a scalar prediction of the sentiment of the current review

The hidden layers use relu as the activation function. The last layer uses the sigmoid activation function to output a probability: a score between 0 and 1 that indicates how likely the sample is to have the target ‘1’, so a high score means the review is likely to be positive. relu is a function that sets negative values to zero. sigmoid squashes an arbitrary value into the interval [0, 1], so the output value can be interpreted as a probability.
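Both activation functions are short enough to write out by hand. This is a plain-Python sketch for single numbers, not the tensor implementation Keras uses:

```python
import math


def relu(x):
    """Negative inputs become zero, positive inputs pass through."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any real number into the range [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # Rewritten form for negative x so that exp() cannot overflow.
    z = math.exp(x)
    return z / (1.0 + z)


print(relu(-3.0), relu(2.5))  # 0.0 2.5
print(sigmoid(0.0))           # 0.5
print(sigmoid(4.0) > 0.98)    # True: large inputs approach 1
```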

from keras import models
from keras import layers

model = models.Sequential()
model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(16, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
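As a quick sanity check on the size of this network, the number of trainable parameters can be worked out by hand: each Dense layer has one weight per input-output pair plus one bias per unit.

```python
# Sizes of the input and of each Dense layer in the model above.
layer_sizes = [10000, 16, 16, 1]

# weights (n_in * n_out) plus biases (n_out) for every layer
params_per_layer = [
    n_in * n_out + n_out
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]
total_params = sum(params_per_layer)

print(params_per_layer)  # [160016, 272, 17]
print(total_params)      # 160305
```

Almost all of the parameters sit in the first layer, because that is where the 10,000-dimensional input is connected.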

Finally, you need to choose a loss function and an optimizer. Since this is a binary classification problem and the output of the neural network is a probability (the network ends with a one-unit layer that uses the sigmoid activation function), the binary_crossentropy loss is suitable. It is not the only option; you could also use mean_squared_error, for example. Cross-entropy is usually the best choice for models that output probabilities. Cross-entropy is a concept from information theory that measures the difference between probability distributions. Here it measures the difference between the true distribution of the labels and the predicted distribution.
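For a single sample with label y and predicted probability p, binary cross-entropy is -(y·log(p) + (1 - y)·log(1 - p)), averaged over the samples. Here is a plain-Python sketch; the clipping constant `epsilon` is my own choice to avoid log(0), not something taken from this post.

```python
import math


def binary_crossentropy(y_true, y_pred, epsilon=1e-7):
    """Mean binary cross-entropy over a list of samples."""
    total = 0.0
    for target, prob in zip(y_true, y_pred):
        # Clip so that log() never receives exactly 0.
        prob = min(max(prob, epsilon), 1.0 - epsilon)
        total += -(target * math.log(prob)
                   + (1 - target) * math.log(1 - prob))
    return total / len(y_true)


labels = [1, 0]
confident_and_right = binary_crossentropy(labels, [0.9, 0.1])
confident_and_wrong = binary_crossentropy(labels, [0.1, 0.9])
print(round(confident_and_right, 4))  # 0.1054
print(round(confident_and_wrong, 4))  # 2.3026
```

The loss is small when the predicted probabilities agree with the labels and grows quickly when the model is confidently wrong.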

The following step configures the model with the rmsprop optimizer and the binary_crossentropy loss function. During training, we monitor accuracy.

model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])

Because rmsprop, binary_crossentropy, and accuracy ship with Keras, the optimizer, loss function, and metrics can be specified as strings. Occasionally, you may need to change the parameters of the optimizer or pass your own loss and metric functions. In the former case, create an instance of the optimizer class and pass it as the optimizer argument:

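from keras import optimizers

# Note: older Keras releases spell this argument lr instead of learning_rate.
model.compile(optimizer=optimizers.RMSprop(learning_rate=0.001),
              loss='binary_crossentropy',
              metrics=['accuracy'])

In the latter case, pass function objects as the loss and metrics arguments:

from keras import losses
from keras import metrics

model.compile(optimizer=optimizers.RMSprop(learning_rate=0.001),
              loss=losses.binary_crossentropy,
              metrics=[metrics.binary_accuracy])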

# Compile once more with the string shortcuts before training.
model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])

Training verification

To monitor, during training, how accurate the model is on data it has never seen, we create a validation set by setting aside 10,000 samples from the original training data:

x_val = x_train[:10000]
partial_x_train = x_train[10000:]
y_val = y_train[:10000]
partial_y_train = y_train[10000:]

history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=20,
                    batch_size=512,
                    validation_data=(x_val, y_val))
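It helps to know how much work this call implies. The arithmetic below counts the weight updates, assuming (as I understand the default behaviour for in-memory arrays) that the last, smaller batch of each epoch is still used.

```python
import math

num_train_samples = 25000  # size of the original training set
num_val_samples = 10000    # held out for validation
batch_size = 512
epochs = 20

num_partial_train = num_train_samples - num_val_samples
steps_per_epoch = math.ceil(num_partial_train / batch_size)
last_batch_size = num_partial_train - (steps_per_epoch - 1) * batch_size
total_updates = steps_per_epoch * epochs

print(num_partial_train)  # 15000
print(steps_per_epoch)    # 30
print(last_batch_size)    # 152
print(total_updates)      # 600
```

So the model sees 15,000 reviews per epoch in 30 mini-batches, and after every epoch the loss and accuracy are also computed on the 10,000 validation samples.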

Predicting new data with a trained model

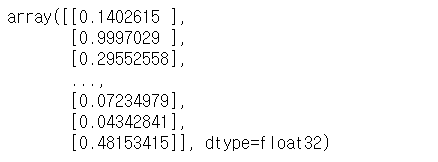

model.predict(x_test)
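The call returns one sigmoid score per review, as an array with one row per sample and a single column. To turn scores into labels, you can apply a cutoff. The sketch below works on a flat list of made-up scores standing in for real model output; the `to_labels` helper and the 0.5 threshold are my own assumptions.

```python
def to_labels(probabilities, threshold=0.5):
    """Map each score to 'positive' or 'negative' using a cutoff."""
    return [
        "positive" if prob > threshold else "negative"
        for prob in probabilities
    ]


# Made-up scores, not actual predictions from the trained model.
toy_scores = [0.98, 0.03, 0.51, 0.40]
predicted_labels = to_labels(toy_scores)
print(predicted_labels)
# ['positive', 'negative', 'positive', 'negative']
```

Scores close to 0 or 1 mean the model is confident, while scores near 0.5, like the third one here, mean it is unsure.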
