Machine learning blind spots

In 2015, Google's image recognition AI caused controversy when it labeled photos of Black people as gorillas. This incident shows a blind spot of AI. Humans can easily tell a chicken from a poodle, but because a machine classifies an image algorithmically, the pictures below can be recognized as the same thing. Similar examples include poodles and muffins, or Welsh corgis and loaves of bread.

Uncertainty

The key point here is uncertainty. When deep learning is applied to autonomous driving or medicine, a serious accident can occur if the uncertainty of a prediction is not estimated. For example, suppose a model predicts with 90% probability that a patient does not have cancer. Most of the time that judgment will be right, but if the patient falls in the remaining 10% and actually has cancer, the wrong judgment has serious consequences.

Bayesian Neural Networks

The Bayesian neural network is a model whose goal is to estimate the uncertainty of a prediction. Instead of a single point estimate, a Bayesian neural network produces its predicted values as distributions.

A prior distribution is assumed for the parameters of the model, and their posterior distribution is estimated by combining our prior knowledge with the data. The Bayesian linear regression model is as follows.

$$y = X\beta + \varepsilon, \qquad \beta \sim \mathcal{N}(0, \lambda^{-1} I_p), \qquad \varepsilon \sim \mathcal{N}(0, \sigma^2)$$
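To make the model above concrete, here is a minimal NumPy sketch of the closed-form posterior for Bayesian linear regression with a zero-mean Gaussian prior on the weights and Gaussian noise. The names `beta`, `lam` (prior precision), and `sigma2` (noise variance), the helper functions, and the toy data are all my own assumptions for illustration.

```python
import numpy as np

def posterior(X, y, lam, sigma2):
    """Gaussian posterior N(mean, cov) over the weights beta."""
    p = X.shape[1]
    # Posterior precision = prior precision + data precision.
    precision = lam * np.eye(p) + X.T @ X / sigma2
    cov = np.linalg.inv(precision)
    mean = cov @ X.T @ y / sigma2
    return mean, cov

def predict(x_new, mean, cov, sigma2):
    """Predictive mean and variance for a single input vector."""
    pred_mean = x_new @ mean
    # Weight uncertainty (x^T cov x) plus observation noise.
    pred_var = x_new @ cov @ x_new + sigma2
    return pred_mean, pred_var

# Toy data (hypothetical): two features, known true weights.
rng = np.random.default_rng(0)
true_beta = np.array([2.0, -1.0])
X = rng.normal(size=(50, 2))
y = X @ true_beta + rng.normal(scale=0.5, size=50)

mean, cov = posterior(X, y, lam=1.0, sigma2=0.25)
near = predict(np.array([0.1, 0.1]), mean, cov, sigma2=0.25)
far = predict(np.array([10.0, 10.0]), mean, cov, sigma2=0.25)

print("posterior mean:", mean)
print("predictive variance near the data:", near[1])
print("predictive variance far from the data:", far[1])
```

The prediction comes back as a mean and a variance rather than a single number, and the variance grows for inputs far from the training data, which is the kind of uncertainty signal the cancer example calls for.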

Because the parameters are distributions rather than fixed values, a Bayesian neural network can produce different predicted values for the same input. The exact posterior of a neural network cannot be computed directly, so it is approximated: a simple, tractable distribution is assumed and fitted so that it resembles the posterior as closely as possible.
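One common way to write this approximation down is variational inference. The notation here is my own assumption: $w$ for the network weights, $\mathcal{D}$ for the training data, and $q_\theta(w)$ for the assumed distribution with parameters $\theta$. The assumed distribution is fitted by minimizing its KL divergence from the posterior, and predictions are then averaged over weights sampled from it:

$$\theta^{*} = \arg\min_{\theta} \, \mathrm{KL}\big(q_\theta(w) \,\|\, p(w \mid \mathcal{D})\big)$$

$$p(y^{*} \mid x^{*}, \mathcal{D}) \approx \frac{1}{T} \sum_{t=1}^{T} p(y^{*} \mid x^{*}, w_t), \qquad w_t \sim q_{\theta^{*}}(w)$$

Each sampled $w_t$ gives a slightly different network, which is why the same input can produce different predicted values.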

Dropout

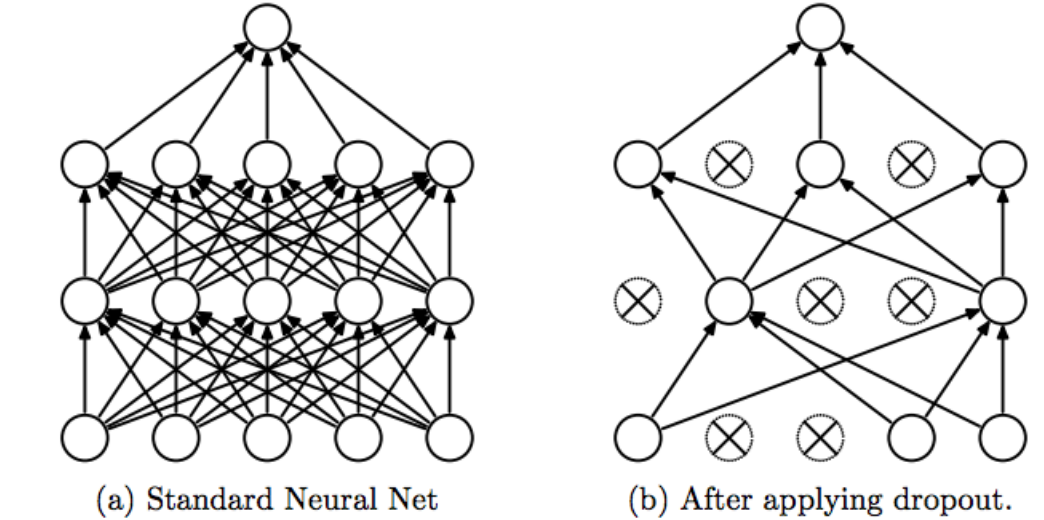

Dropout is a regularization method that reduces overfitting by randomly disconnecting nodes for each mini-batch.

For deep learning algorithms that do not use dropout, the parameters are fixed in the inference stage after learning. With standard dropout, the fixed parameters are additionally multiplied by a weight (the probability of keeping a node) in the inference stage. To estimate uncertainty, however, dropout is applied not only in the learning process of the model but also in the inference stage, so that repeated predictions for the same input differ and their spread can be read as uncertainty. This approach is known as Monte Carlo dropout.
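The difference between the two inference modes can be sketched in a few lines of NumPy. This is only an illustration under my own assumptions: the tiny one-hidden-layer network, its random "trained" weights `W1` and `W2`, the keep probability `KEEP_PROB`, and the function names are all hypothetical, and no training is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "trained" weights for a tiny one-hidden-layer network.
W1 = rng.normal(size=(3, 16))
W2 = rng.normal(size=(16, 1))
KEEP_PROB = 0.8  # probability that a hidden node is kept

def forward(x, mode):
    """One forward pass; mode is 'scale' or 'sample'."""
    h = np.maximum(0.0, x @ W1)  # ReLU hidden layer
    if mode == "scale":
        # Standard dropout inference: fixed weights scaled by keep prob.
        h = h * KEEP_PROB
    elif mode == "sample":
        # Dropout kept on at inference: randomly disconnect hidden nodes.
        mask = rng.random(h.shape) < KEEP_PROB
        h = h * mask
    return (h @ W2).item()

def mc_dropout_predict(x, n_samples=200):
    """Mean and standard deviation over repeated stochastic passes."""
    samples = [forward(x, "sample") for _ in range(n_samples)]
    return np.mean(samples), np.std(samples)

x = np.array([1.0, -0.5, 2.0])
deterministic = forward(x, "scale")
mc_mean, mc_std = mc_dropout_predict(x)

print("standard dropout inference:", deterministic)
print("MC dropout mean:", mc_mean)
print("MC dropout std (uncertainty):", mc_std)
```

The scaled pass always returns the same number for the same input, while the sampled passes vary from call to call. The standard deviation of those samples serves as a rough uncertainty estimate for the prediction.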

Next time, I will add details on the loss function and further explanation.